Rational Scientific Theories from Theism


Layered Cognitive Networks

An architecture is proposed in which connectionist links and pattern-directed rules are combined in a unified framework, involving the combination of distinct networks in layers. Piaget's developmental psychology is used to suggest specific semantic contents for the individual layers.

Introduction

In cognitive psychology there appears to be a creative tension between models that use connections of a network, and models that use rules for symbol manipulation. The idea of a connectionist network goes back to McCulloch & Pitts [1943] and Hebb [1949], and has found a recent revival in the 'parallel distributed processing' (PDP) models that have been extensively examined in the last few years (see e.g. Rumelhart et al. [1986]). In the intervening years, however, the predominant explanations of psychology have been in terms of rules for the manipulation of symbolic structures (e.g. since Newell & Simon [1963]). Because of the tension between these different approaches (see e.g. Fodor & Pylyshyn [1988]), there is great interest in the formulation of 'hybrid systems' which combine connectionist components with traditional symbolic processing. I attempt below to outline the general architecture of such a hybrid system.

The primary difference between connectionist and symbolic processing, I argue (with Fodor & Pylyshyn [1988]), is not the existence of symbols, but rather the existence of rules with general pattern-based applicability. The reason for this is that connectionist systems can readily model the presence of symbols by, for example, having links from all the occurrences of each symbol to one central 'symbol table' node. (This is in fact the way that symbols are implemented in list-processing systems.) Thus symbolic structures, semantic nets for example, can in general be modelled as kinds of connectionist systems.
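The following is a minimal sketch of the point about symbols, using nothing beyond plain Python. The graph has only unlabelled nodes and undirected links. Every occurrence of a symbol is nonetheless linked to one central node, much as a list-processing system stores each atom once. The names `Graph`, `add_occurrence` and the `SYM:` prefix are hypothetical illustrations, not part of any cited system.

```python
from collections import defaultdict

class Graph:
    """A bare connectionist-style graph: unlabelled nodes and undirected links."""
    def __init__(self):
        self.links = defaultdict(set)

    def link(self, a, b):
        self.links[a].add(b)
        self.links[b].add(a)

def add_occurrence(graph, symbol_table, occurrence_id, symbol):
    """Link one occurrence node to the single central node for its symbol."""
    central = symbol_table.setdefault(symbol, f"SYM:{symbol}")
    graph.link(occurrence_id, central)
    return central

g = Graph()
table = {}
# Two hypothetical structures (s1, s2), each with its own occurrence nodes.
for occ, sym in [("s1.agent", "dog"), ("s1.object", "bone"),
                 ("s2.agent", "cat"), ("s2.object", "dog")]:
    add_occurrence(g, table, occ, sym)

# All occurrences of 'dog' are found by following links from its central node.
print(sorted(g.links[table["dog"]]))  # ['s1.agent', 's2.object']
```

Finding every structure that mentions a symbol is then just a matter of following links from one node. No pattern-based rule is involved, which is why symbols alone do not separate the two approaches.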
Semantic nets (see Quillian [1968/1980], Schank [1973,1975], Simmons [1973] and Findler [1979]) are an attempt to represent the meanings of sentences by means of a formal network structure. Various forms of these structures are possible (for recent systematisations see Brachman [1979] or Sowa [1984]), but typically objects are represented by nodes in a network, their relations by arcs between the nodes, and inferences involving them by production rules which manipulate the network. The nodes and arcs are usually labelled with English words we can recognise. Strictly, however, this should not be necessary, as the semantic net is an attempt to represent the whole meaning of a sentence or concept by its position in an extensive network, and by operations on that network. That is, attempts are made to define meanings entirely in terms of relations and operations in a formal network. Once this is done, the original semantic net will have become a connectionist system. In this transformation of semantic nets into connectionist networks, the place of the rules remains problematic. In the original semantic net, it is often thought that rules can be included on an equal footing with relations, because production rules can be explicitly included as kinds of nodes or substructures in the network (as in Anderson [1983]). Specific interpretive mechanisms might still be needed, however, to apply the 'rule' components to the 'data' components of the network. Alternatively, the rules could be converted into certain links in the connectionist network, for example along the lines of Rumelhart & McClelland [1986a] or Hinton & Lang [1985]. The 'application' of a 'rule' to a given pattern in the network then amounts to the activation of a 'rule node' because a suitable pattern of nodes, linked explicitly to that node, has been activated.
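The rule-as-links alternative can be sketched as a threshold unit. This is a hedged illustration only, not the specific networks of Rumelhart & McClelland [1986a] or Hinton & Lang [1985]. The class `RuleNode`, its weights and the example rule are hypothetical. The 'rule node' becomes active only when enough of the nodes explicitly linked to it are active.

```python
from dataclasses import dataclass, field

@dataclass
class RuleNode:
    """A 'rule' compiled into links: it fires only via nodes explicitly wired to it."""
    name: str
    inputs: dict = field(default_factory=dict)  # linked node name -> link weight
    threshold: float = 1.0

    def activation(self, active_nodes):
        # Sum the weights of linked nodes that are currently active;
        # nodes with no link to the rule node contribute nothing.
        total = sum(w for node, w in self.inputs.items() if node in active_nodes)
        return 1.0 if total >= self.threshold else 0.0

# Hypothetical rule "bird & injured -> cannot-fly", wired as two links of weight 0.5.
rule = RuleNode("cannot-fly", {"bird": 0.5, "injured": 0.5}, threshold=1.0)
print(rule.activation({"bird", "injured"}))     # 1.0
print(rule.activation({"bird"}))                # 0.0
print(rule.activation({"penguin", "injured"}))  # 0.0: 'penguin' was never linked
```

The last call shows the limitation: a node that was never wired to the rule node contributes nothing, however relevant it might be.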
I now argue, however, that this is in general an inadequate account of rules, and that an extended connectionist architecture is necessary to take them properly into account. As a specific but simple example, consider the first recognition of a four-leaf clover. No such object will ever have been seen before, yet the idea-node for 'four' will clearly have to be activated for a collection of objects that may never have occurred together in the system. It would appear that the node for 'four' has to be activated by a rule or a function that counts whatever nodes are activated, and not just by those nodes with which it has been explicitly linked in the past. A similar conclusion is reached from linguistic considerations in Lachter & Bever [1988]. It appears that the generality of application of rules is something that cannot be adequately captured in a purely connectionist network, although a weak imitation of it may be simulated in small specific problem domains. The ability to recognise new instances of a specific concept means that the concept-node cannot be activated by purely historical links, and that some essential kind of rule-based operation will have to be built into the system. Once this is done, I argue, both the symbol and the processing features of traditional symbolic systems can be incorporated within connectionist networks. The architecture proposed to handle both connectionist links and pattern-directed rules involves layers of distinct networks, so that the relations within a layer are given explicitly by the links of the graph, whereas the relations between layers have a functional or rule-based interpretation. Specific types of semantic contents will be proposed for the distinct layers, guided to some extent by Piagetian theories of development. Further evidence for the specific contents will then be presented from logical, psychological and computational considerations.
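The contrast in the four-leaf clover example can be made concrete with two hypothetical activation functions for the 'four' node. The first fires only for collections it has been linked to in the past. The second applies a counting rule to whatever is currently active. Both functions and all node names are illustrative assumptions.

```python
def historical_four(active, past_collections):
    """Associative 'four' node: fires only for collections it was linked to before."""
    return frozenset(active) in past_collections

def counting_four(active):
    """Rule-based 'four' node: counts whatever nodes are active, familiar or not."""
    return len(active) == 4

# Hypothetical past experience: collections previously linked to 'four'.
past = {frozenset({"wheel1", "wheel2", "wheel3", "wheel4"}),
        frozenset({"leg1", "leg2", "leg3", "leg4"})}

clover = {"leaf1", "leaf2", "leaf3", "leaf4"}  # never seen together before
print(historical_four(clover, past))  # False
print(counting_four(clover))          # True
```

Only the counting rule generalises to the never-before-seen collection of leaves. This is the sense in which the concept must be reached by a rule rather than by historical links.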
It should be pointed out, however, that the general layered architecture is compatible with a range of hypotheses concerning the specific contents of the various layers. Furthermore, because of the wide scope of the hypothesis, this paper should be regarded not as a minimal inductive generalisation of the evidence to hand, but as an exercise in theoretical psychology. Note that the 'layers' being proposed here are distinct networks, and should not be confused with what we might call the different 'levels of explanation' for any given network. The layers here are like the distinct floors of a house, which has different kinds of connections within a floor and between floors. It is also possible to define the multiple 'levels' at which semantic networks may be considered (see e.g. Brachman [1979] and Brachman et al. [1985]). Brachman insists, for example, that the implementational, logical, knowledge (epistemological), conceptual and linguistic levels should not be conflated. These levels, however, are not the 'layers' of the present paper, as his levels are merely different ways of looking at the operations of any given network. They are like the parallel levels of hardware, logic gates, functional units, machine instructions, software etc., in the operation of a digital computer. The general idea of multiple layers has been around for some time: Greenwald [1988] surveys the support for what he calls 'levels of representation' in many different kinds of psychological theories (his 'levels' are here 'layers'). He also formulates his own system of layers: this will be examined in section 3.2 below. Our proposed 'layering hypothesis' has a broad scope, as it attempts to bring together and formalise disparate developments in cognitive psychology, developmental psychology, and in the computational modelling of knowledge.
Rather than produce new experimental results bearing on the layering hypothesis, we have chosen to collect existing evidence from these different fields, and bring under one roof structures invented for a variety of purposes. Neither of us has produced a computational model embodying the layering hypothesis in a programmed form. This is because, I will argue, with the help of the hypothesis it is possible to pinpoint the most difficult areas in computational modelling and in AI as being precisely the succinct embodiment of realistic relations between the layers. Section 2 outlines the details of the layering hypothesis, and discusses specific proposals for the contents of the different layers, and how the relations between layers might be set up. Section 3 compares the layering hypothesis with other kinds of theories attempting to cover the same material. The initial PDP work in the area of connectionist systems (Rumelhart et al. [1986]), for example, treats all processes as the activity of one-layer 'neural networks'. Although the PDP work explores 'multi-level' networks as the generalisation of the original 'one-level' perceptrons, the networks are all within one layer in the sense of the present paper. This is because they have only fixed connections, and no functional or algorithmic operations. We must therefore consider to what extent these one-layer neural net models efficiently explain an adequate range of cognitive phenomena. A number of authors (e.g. Minsky [1975], Boden [1978]) have pointed out the extensive similarities between the enterprises of Piaget and of cognitive modelling, and have suggested that closer cooperation should prove fruitful. Production systems have been applied to the analysis of specific Piagetian tasks by Baylor & Gascon [1974] and Young [1976], but only Minsky [1975], to my knowledge, has touched on the overall phenomenon of stages of development which Piaget has described.
The psychology of development is relevant both to cognitive psychology and to the goals of artificial modelling of intelligence (AI) because an understanding of the order in which cognitive structures develop is an important guide to simulating them realistically. Piaget [1926, 1962] has described several stages of cognitive development, and has characterised them by the performance (or otherwise) of a variety of simple tasks. In section 4.1, therefore, these tasks are summarised, and it is suggested how they lead to and interrelate with the proposed layering scheme. From the point of view of layering, section 4.2 will then examine the ways that AI workers have approached some of the problems in the computational representation of knowledge. It will be seen that, in the light of the deficiencies of the earlier formal schemes which assumed all the structures existed at 'one level', the more successful approaches have tended to build in the possibility of multilayer structures.

Layered Networks

Architecture

The hypothesis being suggested in this paper is that knowledge can be organised in specific stages, which correspond to separate layers in a semantic network, and that the connections within a network layer are qualitatively different from the correspondences and relations which hold between layers. Many different kinds of semantic and connectionist nets have been proposed in the literature, but I will argue that these are often best regarded as specific layers in the more general organisation.

The task of the present paper is not to propose a detailed formalism, but to propose and examine the architecture of multiple layers. We take, therefore, individual layers to be network structures, the nodes of which represent discrete mental sensations or concepts. The contents or meanings of these sensations or concepts are then defined by their relations to the other nodes, and thus recursively to the whole structure.
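One way to picture the proposed architecture as a data structure is sketched below under stated assumptions. Each layer stores explicit labelled arcs between its own nodes. Each connection from layer n to layer n+1 is a function over a set of active nodes, not a stored arc. The classes `Layer` and `LayeredNetwork`, the method `recognise` and the example rule are hypothetical; the paper itself does not propose a detailed formalism.

```python
from collections import defaultdict

class Layer:
    """One network layer: a node's meaning lies in its explicit arcs to other nodes."""
    def __init__(self, index):
        self.index = index
        self.arcs = defaultdict(set)  # node -> {(relation, other_node), ...}

    def relate(self, a, relation, b):
        self.arcs[a].add((relation, b))

class LayeredNetwork:
    """Layers 0..k whose inter-layer links are rules (functions), not stored arcs."""
    def __init__(self, n_layers):
        self.layers = [Layer(i) for i in range(n_layers)]
        # rules[n] holds functions mapping a frozenset of active layer-n nodes
        # to a layer-(n+1) node name, or None if the rule does not apply.
        self.rules = defaultdict(list)

    def add_rule(self, from_layer, rule):
        self.rules[from_layer].append(rule)

    def recognise(self, from_layer, active):
        """Apply every rule from layer n to the active pattern; return layer-(n+1) nodes."""
        results = (rule(frozenset(active)) for rule in self.rules[from_layer])
        return {node for node in results if node is not None}

# Usage with hypothetical node names: six layers, numbered 0..5 as in the text.
net = LayeredNetwork(n_layers=6)
net.layers[1].relate("clover", "grows-in", "field")  # a within-layer arc
net.add_rule(0, lambda act: "clover" if {"green", "three-lobed"} <= act else None)
print(net.recognise(0, {"green", "three-lobed", "bright"}))  # {'clover'}
```

The rule here is still a fixed pattern test. It differs from an arc in that it is evaluated against whatever happens to be active, rather than being a stored link to particular nodes.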
Various psychological processes are then assumed to correspond to operations on the network. The recall in memory of associated meanings and associated episodes from the past, for example, can correspond to travelling around the network. A psychological model of attention could be based on giving each node an 'activation level', to be passed on to those other nodes with which it is connected, as in the 'spreading activation' models of Collins et al. [1975], or the more recent PDP models. A model of short-term memory could be based (see e.g. Cunningham [1972]) on regulating the total level of activation so that typically at most 7 +/- 2 nodes are active (Miller [1956]).

The Layer Contents

Coming now to the specific stages of the layering hypothesis, nodes in the first layer (called layer 0) are taken to represent components of sensations: images, sounds, tastes, textures, etc., and their relations in the perceptual fields as well as in their temporal orders. Nodes in the next layer, '1', then represent the concepts of material objects and their relations in space. The crux of the layering hypothesis is that the connections within layers 0 and 1 are qualitatively different from the functional mechanisms needed to relate the two layers together. Support for this claim will be given from logical considerations (below), from the psychology of development (section 4.1), and from AI work (section 4.2). Because a given object can appear under very many sensory appearances, due to the possible operations of the continuous rotation and translation groups, not to mention ranges of lighting and occlusions, the relations between the concept of an object and its sensory appearances are quite different in character from the connections that exist between objects, or between appearances. It seems implausible that even a young child has a separate set of network connections for every rotation and translation, although such schemes have been proposed (see Hinton et al. [1985] and section 3.1 below). This implausibility suggests that the mechanism relating the two layers must be distinct from the explicit arc mechanism that relates nodes within a given layer. Section 2.3 will discuss how these new mechanisms between layers might operate. Layer '2' is assumed to represent the structure of events, episodes, single relations, and ideas of simple causality. The meanings represented in this layer are especially those of simple subject-verb-object sentences, using names which have been attached to the layer 1 concepts of objects. These names and other words must also be related to their pronunciations, i.e. to sounds, which are layer 0 structures.
This means that linguistic features are attached to all three layers described so far, and that the correspondences between layers can, at least in part, be considered a linguistic phenomenon. Indeed, much of the initial impetus for the development of the theory of semantic nets came from Fillmore's [1968] work on case grammars. Layer 2 contains not only the logic for simple action sentences, but also the logic supporting ideas of time and causality. It might contain, for example, the logic of temporal successions (see e.g. Allen et al. [1985]), and the logic of 'naive causality' (see e.g. de Kleer et al. [1984]). The next layer, '3', is used to describe various abstractions from what is concretely observable, such as classes, series, multiple relations, and numbers, along with their mathematical relationships. The ability to move freely backwards and forwards around the network of these concepts means that operations can be compared, reversed, and transitively compounded. Again it should be clear that the relation between the abstractions at this layer and the structures at previous layers is not one of static connections by arcs in a network, but has a more dynamic or computational basis. It is implausible that there are connections between, say, the number 'four' at this layer and all the sets of four objects, four events, etc. Rather, there must be general processes whereby, whenever there are four things present and counting becomes required or relevant, the concept of 'four' is activated. From the logical viewpoint, a concept on the next layer must be defined intensionally (by some functional criterion), rather than extensionally (by giving the set of all configurations on the present layer which satisfy that criterion). Layer '4' describes and relates, as entities, whole sequential structures of possible operations.
It deals with plans, scripts, programs (on computers and elsewhere), and in general, sets of possibilities that are not directly related to what is actually present. The final layer, '5', is postulated to deal with formal structures as 'objects' in their own right. This allows interpretations, world-views, and general paradigms (see Kuhn [1970]) to be considered explicitly. It now becomes possible not only to use formal systems flexibly, but also to think about theories, to construct them, and to realise that formal theories are to some extent independent of the paradigms used to interpret them. From the logical point of view, it appears that the novelty in this stage is the construction of meta-theories. This enables intelligent control over the way the formal theories of the previous stage are formulated, used, and evaluated.

Connections Within and Between Layers

Within a given layer, standard spreading-activation techniques can be used for searching the network structure to retrieve particular episodes, or to explore the conceptual associations from a given starting node. More complex processes, however, require at least some elementary kinds of rules. If these are available, then networks can be progressively expanded according to given rules, as for example when planning a journey, solving puzzles, or playing board games (see Newell et al. [1963]). These problems may be called 'combinatorial problems', since they essentially involve exploring successive combinations or concatenations of network arcs, and testing the proposed arrangements according to some criterion, until a particular set is found as the solution.

There are two positions one can take concerning the rules needed for the manipulation of a given layer. They can be regarded as structures either in the same layer as their 'data', or in a different layer.
If the rules are in the same layer, then computationally they are easier to model, but explicit interpretative mechanisms must be introduced to enable the 'rule structures' to be applied to their data. It is difficult to see how to do this if each layer is regarded as an unlabelled connectionist network. A stronger hypothesis would be to regard these rules as special cases of the inter-layer rules, as will be discussed in section 3.5.

Between layers, modelling the connective mechanisms is not so easy. However, we can be guided initially by work in linguistics, because linguistic features are attached to nodes in the first three layers (0, 1 and 2), and hence we might look for parallels between the mechanisms which connect the conceptual layers and those which connect the linguistic layers. The relevant linguistic features are the sentence meanings of layer 2, the names attached to the layer 1 concepts of objects, and then the layer 0 structures of the pronunciations, sounds and written forms. Chomsky [1965] has proposed that 'transformational grammars' are necessary to relate the sentence meanings at layer 2 with sets of words at layer 1, and that similar 'phonological grammars' appear to be necessary (Chomsky & Halle [1968]) to relate words to their pronunciations, the layer 0 'images'. Transformational grammars are sets of transformational rules that relate sequences of words (at layer 1) with a 'deep structure' at layer 2, and as Chomsky points out, these rules can have considerable structural complexity. The complexity arises from the need to map portions of semantic networks onto linear sentences, so that the semantic content of arbitrary network relations in the speaker may be verbally encoded in a kind of 'linearised' form for communication to the listener.
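To illustrate what 'linearising' a portion of a semantic network involves, the toy function below maps a small case frame (agent, action, object) onto a word string, with an optional passive reordering. It is a hypothetical sketch, far simpler than Chomsky's transformational rules. The frame keys, the verb-form table and the function name `linearise` are assumptions made for the example.

```python
def linearise(frame, passive=False):
    """Map a small case-frame network (layer 2) onto a linear word string.

    Toy illustration only: the 'transformation' just chooses a word order.
    """
    agent, verb, obj = frame["agent"], frame["action"], frame["object"]
    if passive:
        # Crude passive: object fronted, auxiliary inserted, agent in a by-phrase.
        words = [obj, "was", verb["participle"], "by", agent]
    else:
        words = [agent, verb["past"], obj]
    return " ".join(words)

frame = {"agent": "the dog",
         "action": {"past": "bit", "participle": "bitten"},
         "object": "the postman"}
print(linearise(frame))                # the dog bit the postman
print(linearise(frame, passive=True))  # the postman was bitten by the dog
```

The same network content yields two different linear orders. The structural complexity lies in the mapping, not in the network being expressed.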
Generalised Transformational Grammars

Generalising now from the linguistic case, there appear to be what I call 'generalised transformational grammars', or 'correspondence rules', that map arrays of nodes at one layer onto a portion of a network on the next higher layer. The rules must be able to operate independently over the whole network, as we saw in the case of the four-leaf clover. When recognising patterns in lower layers, for example, it must be possible to activate any part of the higher layer whenever a corresponding pattern of lower-layer nodes is activated. The rules must be able to relate to nodes and arcs other than via explicit arcs of a network: they are more akin to programs operating on data structures than to purely linking structures. They will not be invoked explicitly in any predetermined calling pattern, but are active elements (variously called monitors, demons or production rules) which constantly monitor the current state to see if their characteristic patterns occur. Waterman et al. [1978] includes discussions of such mechanisms for pattern-directed control of inference steps, and Anderson [1983] gives an extensive application of pattern-directed production rules.

Some of the apparent properties of these 'generalised transformational grammars' are listed below:

For example, within an activation model, if there is a suitable array of activated nodes at layer n, then the corresponding node on layer n+1 is activated. Conversely, the sufficient activation of a node on layer n will lead to the excitation of corresponding arrays of nodes on layer n-1 (if not already activated). The former process is perception or recognition, and the latter is the generation of imagery, speech and/or action.

Relation to Other Theories

PDP Networks

Recently there have been attempts (see especially the PDP models of Rumelhart et al. [1986]) to reinstate purely connectionist mechanisms as sufficient for all cognitive modelling. These purely connectionist architectures are formally complete, in the sense that they can simulate any finitely-specified input/output function. However, the problems of visual recognition, to take one example, require such an extraordinarily large number of nodes and arcs that it is implausible that purely connectionist processes are actually used. Some deficiencies of the PDP models for language learning are outlined in Pinker & Prince [1988]. Fodor & Pylyshyn [1988] describe how these difficulties arise because the connectionist models do not have any notion of procedure or production rule (unless the architecture is artificially predetermined to simulate them). The PDP models are thus even simpler than the one-layer semantic networks, as they have only nodes and connections, without any production rules to operate on the network.

In the case of the relations between an object and its visual appearances under arbitrary translations and rotations, connectionist schemes have been proposed (Hinton & Lang [1985]), but these are schemes which require a number of inter-layer connections which rises as the product of the number of nodes on layer 0 (the number of image 'pixels') with the number of possible transformations.
That is because there has to be an explicit arc joining two nodes for every possible logical connection which may be required. The number of connections is in fact so large that Hinton & Lang [1985] do not even include them explicitly in their simulations, but write procedures which connect just those arcs which are relevant to given input configurations. This use of procedures or rules is more in line with the layering hypothesis. There may of course be some explicit arc connections between separate layers, to record facts such as the particular instances of more general concepts. These facts are like the associations of classical psychology, and Lachter & Bever [1988] point out that these must be distinguished from the rules needed to model linguistic competence. For example, associative facts, such as that particular images arise from a given object, or that particular objects were involved in a given event, may be represented by specific arc connections between those images and the given object, and so on. But this does not imply that the object is specified by those connections. We must draw a distinction between relations of instantiation, as recorded for example in episodic memory, and relations of specification, which define the meaning of a higher-layer concept sufficiently definitely that new instances can be recognised.

Greenwald's 'Levels of Representation'

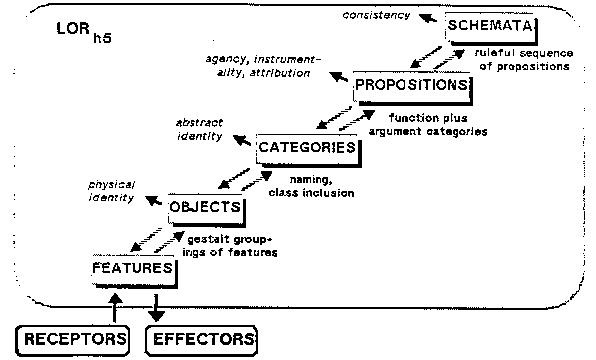

Table 2: Greenwald's five levels of human mental representation (Greenwald [1988]).

Greenwald [1988] also postulates a scheme of distinct levels or layers of cognitive representation. His scheme (see Table 2 above) is similar to that of the present paper in the following respects: (a) the approximate number and identity of the levels, (b) the critique that much existing representation theory deals with within-level relations, rather than between-level relations, (c) the relation to Piaget's theory (see section 4.1 below), (d) the assumption of bidirectional inter-level relations, and (e) the distinction between levels of analysis and levels (i.e. my layers) of representation. However, there is one noticeable difference between the layered structure proposed here and that of Greenwald. He focuses on a 5-level scheme of features, data, objects, categories, propositions, and schemata. This differs in that he places categories at a lower level than propositions and case structures, the reverse of the order I propose. This detail is clearly an empirical question, independent of the question of the existence of layered architectures. The difference may, however, be more apparent than real. This is because I do have single relations and categories in layer 2 (alongside events). It may be that they form a 'sublayer' that is 'below' the layer of events and case structures as such, but it is clear that there must be another layer (my layer 3) in which there are generalised relations between events and relations, in order to represent numbers, correspondences, and the reversibility of operations. It is this last layer that does not appear to be clearly present in Greenwald's scheme.

Bottom-up and Top-down Processing

One of cognitive psychology's recurrent hypotheses, as Anderson [1983] notes, is that there are two modes of cognitive processing. One is automatic, less capacity-limited, possibly parallel, and invoked directly by stimulus input.
The second requires conscious control, has severe capacity limitations, is possibly serial, and is invoked in response to internal goals. The idea was strongly emphasised by Neisser [1967] in his distinction between an early "preattentive" stage and a later controllable, serial stage.

Anderson tries to assimilate this distinction to a somewhat similar but not identical distinction, namely bottom-up versus top-down processing, as it is called in computational work. (Bottom-up processing starts with the data and tries to work up to the high level. Top-down processing tries to fit high-level structures to the data.) Lindsay and Norman [1977], in their introductory psychology text, use a related distinction between data-driven and conceptually driven processing. The distinction is quite clear in language parsers, where control can be in response to incoming words or to knowledge of a grammar. However, the distinction is also found in many models of perceptual processing, although more recently the trend is to combine the two processes so that processing occurs in response to goals and data jointly. The original hypothesis of two modes of processing may be explained as the result of bottom-up versus top-down strategies, but it is perhaps better explained as the distinction between intra-layer and inter-layer operations in a multi-layered architecture. For the operations within one layer are serial, require conscious control, and are limited in capacity to what can be held in short-term memory. The transformations between layers, on the other hand, appear to be automatically invoked with some kind of parallel pattern matching triggered directly by the appearance of the appropriate patterns. The second process does not require conscious control in its detailed operation, and is used at a "preattentive" stage to form the perceptions which do reach conscious attention.
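The claimed capacity limit on intra-layer operations can be sketched as spreading activation within one layer, where only the most active nodes survive each step. The cap of 7 follows the 7 +/- 2 figure (Miller [1956]) cited earlier in the text. The function `spread`, the `spread_factor` parameter and the example network are hypothetical. The parallel inter-layer pattern matching is not modelled here.

```python
from collections import defaultdict

def spread(links, start, steps=3, spread_factor=0.5, capacity=7):
    """Spread activation from `start` along within-layer links.

    After each step only the `capacity` most active nodes are kept, a crude
    stand-in for the short-term memory limit of about 7 +/- 2 items.
    """
    act = {start: 1.0}
    for _ in range(steps):
        new = defaultdict(float, act)  # nodes keep their current activation
        for node, a in act.items():
            for neighbour in links.get(node, ()):
                new[neighbour] += a * spread_factor
        # Rank by activation (ties broken by name, for determinism) and truncate.
        ranked = sorted(new.items(), key=lambda kv: (-kv[1], kv[0]))
        act = dict(ranked[:capacity])
    return act

# Hypothetical layer: one concept associated with twelve others.
links = {"hub": [f"n{i}" for i in range(12)]}
active = spread(links, "hub")
print(len(active))  # 7: the remaining associates are dropped
```

However many associates a node has, only a handful can be held active at once, and the rest must be visited serially in later steps.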
If this interpretation is correct, it is then possible for top-down and bottom-up processing (and their combinations) to occur both for the operations within layers and for the operations between layers. The parallel construction of perceptions, for example, could be 'data-driven' by the appearance of sensory stimuli, or it could be 'conceptually driven', as in the production of images in dreams and hallucinations. Similarly, the serial operations of conscious planning or problem solving (of GPS-like problems) could be 'data-driven' by the succession of partial solutions, or they could be 'conceptually driven' by explicit goals or strategies concerning what constitutes an appropriate solution.

Symbolic Modelling

Looking now at what has been achieved in AI work, we see that the most successful ventures have concentrated on symbolic manipulations and on solving combinatorial problems using production rules within one layer, or on generating lower layers from a given stage.

Manipulation of symbols and structural combinations may be concerned with image processing (layer 0), with object relations (layer 1), with simulating sequences of events (layer 2), with classification or number problems (layer 3), or with modelling formal systems (layer 4), but such work has very rarely succeeded in relating these different semantic layers in a realistic fashion. It is still possible to generate lower layers moderately realistically, for example to generate images of objects performing various actions, but the recognition problem, of activating a higher layer by the 'correspondence rules', is more difficult. The recognition problem only becomes manageable in environments where the syntax has been systematically specified beforehand, for example in optical character recognition, or in the 'block worlds' of various AI projects.
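The two directions of a correspondence rule, recognition (bottom-up, data-driven) and generation (top-down, conceptually driven), can be sketched as follows. The `Correspondence` class, its overlap threshold and the 'cup'/'bowl' patterns are hypothetical. In this toy, generation is a direct read-out of a stored pattern, while recognition must decide how much of a noisy, partial pattern is enough.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Correspondence:
    """Hypothetical two-way link between a layer-(n+1) node and a layer-n pattern."""
    higher: str
    pattern: frozenset

    def generate(self):
        # Top-down: the higher node excites its whole lower-layer pattern.
        return set(self.pattern)

    def recognise(self, active, min_overlap=0.75):
        # Bottom-up: fire if enough of the pattern is present,
        # tolerating extra and missing lower-layer nodes.
        overlap = len(self.pattern & active) / len(self.pattern)
        return overlap >= min_overlap

rules = [Correspondence("cup", frozenset({"handle", "rim", "concave", "base"})),
         Correspondence("bowl", frozenset({"rim", "concave", "base", "wide"}))]

seen = {"handle", "rim", "concave", "shadow"}          # partial, noisy input
print([r.higher for r in rules if r.recognise(seen)])  # ['cup']
print(sorted(rules[1].generate()))                     # ['base', 'concave', 'rim', 'wide']
```

Even here, recognition needs a tuning parameter (`min_overlap`) that generation does not. This loosely mirrors the asymmetry described above, but the toy is an illustration, not evidence for it.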
A cursory inspection of the kinds of items in human focal attention shows that there are significant differences between operations within one layer, and operations which relate two layers. We perceive considerable quantities of detail during the successive operations within one layer, and it is possible for us to 'observe' the processes in action, and to guide them by means of explicit rules. A large amount of AI work has involved taking these observed rules and modelling their effects on symbol structures in computers. However, in contrast to the detail we see in the solution of the combinatorial problems that can be posed within only one layer, the transformations between layers seem to occur almost 'invisibly' for us. This lack of detail is deceptive, as the transformations embody a great deal of tacit knowledge (Polanyi [1958], Berry [1987]), no less a quantity, it is claimed, than the explicit knowledge visible in transformations within one layer. This has the consequence that the AI problems of perception and pattern recognition are difficult for us to program, in contrast to solving combinatorial problems: we cannot see the details of our own inter-layer transformations, and so cannot write down simple rules to describe them.

A 'Strong Layering Hypothesis'

As mentioned in section 2, it is possible to keep each layer as a pure connectionist network, and to regard all heuristic rules either as simple associations, or as generated by means of the inter-layer transformations. In this way, no special category of 'heuristic rules' needs to be represented in the network structure of each layer. Production rules, if they are true rules and not merely fixed associations, would then always be in a different layer from their data.

We would then regard rules as simply two-node fragments of the next-higher layer, in the following way. Call the two nodes in layer n+1 p and q, with the rule being p -> q.
An appropriate pattern in layer n first activates node p. Node p then feeds its activation to q, which generates a second pattern of activity in the lower layer n. In this way, arbitrarily complex production rules p -> q for a layer n can be generated by suitable combinations of the inter-layer transformations described previously. There is now no need to distinguish 'rules' from 'data' in each layer. Nor is any specific interpretive mechanism needed beyond what is already necessary to link the layers together.

If this 'strong layering hypothesis' were correct, there would be interesting implications for the status of the heuristic rules currently used in many branches of cognitive modelling. The implication is that there are no specific heuristic rules. There are associations in episodic memory, which sometimes guide searches heuristically, and there are inter-layer transformation rules. There is a certain phenomenological plausibility to this hypothesis. If we are asked to manipulate images (layer 0) in a certain way, for example, we tend to think of them as objects (layer 1). After manipulating those objects, we regenerate the images from the new imagined appearance of the objects. There may of course still be associations between images. These, however, are based on historical connections, and do not have the generality of application that is characteristic of rules. Similarly, if we are asked to manipulate objects (layer 1), it is plausible that we do so by means of imagined actions (layer 2) performed by those objects (e.g. "object a wants to move from x to y"), rather than by abstract move predicates such as move(a,x,y). Whether these implications are in fact true must be the subject of empirical investigation.

According to this stronger hypothesis, there would be an almost complete reversal of the roles of rule-based and associative processing.
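The two-node account of a rule can be sketched in Python as follows. Everything here is an illustrative assumption rather than part of the proposal.

- A layer-n pattern is modelled as a set of feature labels.
- The bottom-up transformation `recognise` abstracts such a set into a layer-(n+1) node that names only the object's place. It keeps the remaining features as bindings.
- The top-down transformation `generate` reverses this.
- The rule itself is nothing but an association p -> q stored in layer n+1. Layer n contains no rules at all.

```python
PLACES = ("left", "middle", "right")  # hypothetical place features

def recognise(pattern):
    """Bottom-up inter-layer transformation, layer n -> layer n+1.
    Activates a node such as 'at_left' from the single place feature and
    keeps the other features as bindings for later regeneration."""
    pattern = frozenset(pattern)
    places = [feature for feature in pattern if feature in PLACES]
    if len(places) != 1:
        return None, None  # no single layer-(n+1) node is activated
    return "at_" + places[0], pattern - {places[0]}

def generate(node, bindings):
    """Top-down inter-layer transformation, layer n+1 -> layer n."""
    return frozenset(bindings) | {node[len("at_"):]}

# Layer n+1 holds only plain associations; each pair p -> q acts as one 'rule'.
ASSOCIATIONS = {"at_left": "at_middle", "at_middle": "at_right"}

def fire(pattern):
    """Apply a rule to a layer-n pattern by way of layer n+1."""
    p, bindings = recognise(pattern)  # the layer-n pattern activates p
    q = ASSOCIATIONS.get(p)           # p feeds its activation to q
    if q is None:
        return None                   # no rule applies
    return generate(q, bindings)      # q generates a new layer-n pattern

print(sorted(fire({"red", "ball", "left"})))      # -> ['ball', 'middle', 'red']
print(sorted(fire({"green", "cube", "middle"})))  # -> ['cube', 'green', 'right']
```

The inter-layer transformations perform the abstraction. As a result, the single association `at_left -> at_middle` applies to any object, whatever its other features. This is the generality characteristic of rules rather than of fixed associations. Applying `fire` twice moves an object from left to right.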
In this reversal, the network associations within a given layer would no longer need to be 'massively parallel'. Instead, they would be regulated to operate in a memory-limited fashion requiring conscious control, with a parallelism of at most 2 to 10 processes. Furthermore, the rule-governed processes, which now operate between layers, would not act under conscious serial control. They would instead act in an automatic parallel manner, as in Anderson [1983]. The principal justification for this counter-intuitive reversal is that, for the reasons given above, the connections between layers must have the generality of rule-based transformational grammars. Once this is granted, only simple and limited associations are needed within each layer. In contrast to the PDP networks proposed for visual and linguistic processing, there can now be a much closer correlation between the active nodes in a layer of the network and the psychological items in short-term memory.

Examples of Layering

Piaget's Cognitive Stages

In attempting to describe the phenomena of mental development in children, Piaget ([1926], [1962]) distinguished five broad stages. These are first the sensorimotor stage (ages 0 - 1), followed by the preconceptual (2 - 3) and intuitive (4 - 6) stages. (The preconceptual and intuitive stages together are called the 'preoperational' stage.) Then come the operational (7 - 11) and formal (12 - 16) stages. The 'creative stage' (ages 17 - ) is a sixth stage postulated by Gowan [1972] as an extension of Piagetian theory. Assuming knowledge of the basic theory, I will briefly outline how these divisions arise within the layered architecture outlined above.

Table 3: Relation between Network Layers and Piagetian Stages

Sensorimotor Stage

Preoperational Stage

The 'preoperational' years involve building up nodes at layer 2 that represent events and episodes as single entities. Procedures are built up to recognise such events while observing successive positions of objects (at layer 1), and to use subject-object sentences to express these features linguistically. In this stage, the child begins to see relations between objects. The child can also use categorising words (such as colours and shapes), though not systematically. The child can still imagine only one relation at a time. He therefore cannot examine his own consistency over time, cannot see one-to-one correspondences, and cannot see a series as a whole.

Piaget's observations of conservation vs. non-conservation can be explained on the layering hypothesis. The child is beginning to have concepts of relations such as 'more' or 'less' in layer 3, but does not yet coordinate these concepts among themselves within that layer. That is, there appear to be procedures for recognising and naming the given concepts as nodes in a nascent layer 3. These nodes, however, are like isolated islands. They are not yet as connected among themselves as they will be in the next, 'operational', stage.

Operational Stage

The child can now imagine reversible operations, one-to-one correspondences, and series of relations. He can therefore become proficient in operations with group structures (such as rotations and reflections), with matter conservation properties, with numbers, and with classes formed according to property abstractions and related as elements in a lattice. These abilities seem to start with the ability to see relations of relations, even if only of objects actually or recently present. The name 'operational stage' does not mean that this is the first stage for recognising operations and relations, for these are recognised as single events in the preconceptual stage. Rather, this is the age at which there are explicit relations (e.g.
of 1:1 correspondences & numbers) between operations and relations themselves.

Formal & Creative Stages

The final layers 4 and 5 were designed to correlate with Piaget's 'formal' stage, and with Gowan's [1972] proposed continuation into a further 'creative' stage, but the details here become less specific. What is known can be summarised by postulating, first, a distinct layer 4 in which whole sequences of abstract plans can be formulated and explored [...] concerning what exists. Gowan's extension calls for a stage with the ability to formulate meta-theoretic notions and to think about theories. At this stage one can discuss the meaning, interpretation and application of formal theories as if they were individual cognitive entities in an additional layer '5'. These processes enable planners to work out what the goals should be in a given situation. 'Goal detection' has long been recognised (Wilensky [1983]) as a central issue in planning and problem solving.

Layering in Symbolic AI Systems

The idea of layers related by procedures arises naturally in several areas: the classification of data (Rendell [1985]), the comparison of 'macro-operations' (Iba [1985]), and reasoning about programs (Rich [1985]). When simulating a physical system, it is often efficient to simulate the system alternately at several layers. VLSI circuits, for example, may be simulated variously at the electrical, gate, Boolean and functional levels, crossing between levels according to where current attention is focused.

For the representation of more general kinds of knowledge in semantic networks, a number of formalisms use distinct layers:

Sowa's Conceptual Graphs

Sowa [1984] has proposed a comprehensive formalism for representing and manipulating 'conceptual graphs'. These are the components of a semantic network that represent distinct propositions or facts.
The conceptual graphs can be part of layer 1 structures if they describe the properties of objects, or part of layer 2 if they describe actions by means of a node with a verb label. The formalism recognises that classifications and numbers form a distinct 'type hierarchy' layer, which has to be specifically defined. Specialised 'actors' are defined to perform arithmetic within the network of numbers. However, there are no actors or procedures that could, for example, count nodes or arcs elsewhere in the network, and so give some general notion of the meaning of, say, 'three'.

Sowa surmises that an 'associative comparator' (searching long-term memory) classifies 'sensory icons' (layer 0 entities) into 'percepts'. These are then assembled into 'working models' (perhaps better called 'perceptual graphs', after Morton et al. [1987]). However, no guidance is given as to how the associative comparator is to incorporate such processes as translational invariance, varying illumination and partial blocking. That is, although there is an extensive library of operations within a given layer, they do not enable the production or recognition of networks in adjacent layers.

Hybrid Reasoning Systems

If a single inferential component is inadequate for an entire knowledge representation task, it is sometimes possible to combine several reasoners into a complex whole. In KRYPTON (Brachman et al. [1985]), separate inference mechanisms are used for terminological and for assertional reasoning. Another system, KL-TWO (Vilain [1985]), uses separate subreasoners for propositional and for quantificational reasoning. These hybrid systems illustrate how separate inter-layer correspondence mechanisms may be made to work together.
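A minimal Python sketch of such a division of labour follows. It is not KRYPTON's interface: the concept hierarchy, the facts and the function names are all hypothetical. A terminological component answers subsumption questions over a small classification (roughly our layer 3). An assertional component stores facts about individuals. It consults the terminological component whenever a query is phrased in terms of a more general concept.

```python
# Terminological component: a hypothetical classification (concept -> parent).
IS_A = {
    "dog": "mammal", "cat": "mammal", "mammal": "animal",
    "sparrow": "bird", "bird": "animal",
}

def subsumes(general, specific):
    """Terminological reasoning: is `specific` classified under `general`?"""
    while specific is not None:
        if specific == general:
            return True
        specific = IS_A.get(specific)  # climb one level of the hierarchy
    return False

# Assertional component: hypothetical facts of the form (individual, concept).
FACTS = {("rex", "dog"), ("tom", "cat"), ("tweety", "sparrow")}

def instances(concept):
    """Assertional query that defers classification to the terminological component."""
    return sorted(individual for individual, c in FACTS if subsumes(concept, c))

print(instances("mammal"))  # -> ['rex', 'tom']
print(instances("animal"))  # -> ['rex', 'tom', 'tweety']
```

No fact mentions 'mammal' directly, yet the query still succeeds. The correspondence between the two components is carried entirely by `subsumes`.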
In the KRYPTON framework, for example, the terminological 'layer' deals with classifications in our layer 3. The assertional 'layer', by contrast, could be confined to assertions of subject - action - object sentences.

Meta-level Inferences

For some search problems in mathematical problem solving, Bundy ([1983], [1985]) has shown that it is often effective to search at a next-higher 'meta-level', rather than among all the possible recombinations at the 'object level'. Searching at the meta-level means searching among the different strategies for solving the original problem for one that can be applied. This is equivalent to searching among the concepts in the next-higher layer for the pattern (or 'perception') that best describes the original problem.

Use of Dynamic Models in Object Recognition

For recognising objects in a scene, as in the 'bin-picking' problem, one approach (Porrill et al. [1987]) requires a detailed model of objects and sets of objects in three-dimensional space. This model can be used to generate plausible images of objects. The recognition problem can then be regarded as that of choosing the translational and rotational parameters used for the generation. Sullivan [1986] has generalised this approach to use a dynamic model of how the objects may be moving around in the scene. That is, a time succession of images is collected and examined, and moving objects are first detected by successive comparisons. They can then be classified according to the model's prior knowledge of typical movement patterns.

This generalised method amounts to using layer-2 information about events and movements to constrain the search for layer-1 concepts of objects, which are to be detected from layer-0 images. The success of this method reinforces the hypothesis that successive inter-layer transformation mechanisms operate simultaneously in a cooperative manner.
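A toy tracker in Python can sketch the effect of a dynamic model. The assumptions are ours and much simpler than those of Porrill et al. or Sullivan:

- The image (layer 0) is a one-dimensional row of 20 pixels.
- An object (layer 1) is a bar of 3 pixels whose only parameter is its position.
- The layer-2 event model is a constant-velocity prediction from the last two positions.

Recognition works by generating candidate images and comparing them with the observed one. The layer-2 prediction restricts which candidates are generated.

```python
WIDTH = 20                            # hypothetical layer-0 image: 20 pixels in a row
SIZE = 3                              # hypothetical object: a bar 3 pixels long
CANDIDATES = range(WIDTH - SIZE + 1)  # every position at which the bar fits

def render(position):
    """Layer 1 -> layer 0: generate the image of a bar at `position`."""
    image = [0] * WIDTH
    image[position:position + SIZE] = [1] * SIZE
    return tuple(image)

def match(image, candidates):
    """Generate candidate images in turn until one equals `image`.
    Returns (position or None, number of candidates generated)."""
    tried = 0
    for position in candidates:
        tried += 1
        if render(position) == tuple(image):
            return position, tried
    return None, tried

def predict(history):
    """Layer-2 event model (assumed): constant velocity from the last two positions."""
    if len(history) < 2 or None in history[-2:]:
        return None
    return history[-1] + (history[-1] - history[-2])

def track(frames, window=1):
    """Recognise the bar in each frame, letting the layer-2 prediction
    restrict the layer-1 search; fall back to a full search if it fails."""
    history, total = [], 0
    for image in frames:
        guess = predict(history)
        if guess is None:
            position, tried = match(image, CANDIDATES)
        else:
            nearby = [p for p in range(guess - window, guess + window + 1)
                      if p in CANDIDATES]
            position, tried = match(image, nearby)
            if position is None:
                position, extra = match(image, CANDIDATES)
                tried += extra
        history.append(position)
        total += tried
    return history, total

frames = [render(p) for p in (2, 4, 6, 8, 10)]
print(track(frames))                                 # -> ([2, 4, 6, 8, 10], 14)
print(sum(match(f, CANDIDATES)[1] for f in frames))  # -> 35
```

Consider a bar moving two pixels per frame through positions 2, 4, 6, 8 and 10. Exhaustive search generates 35 candidate images in total. The tracker recovers the same positions after generating only 14, because just the first two frames need a full search.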
Particular and General Inference Rules for 'Frame' Transformations

In his 'frames' paper (Minsky [1975]), Minsky discusses some of the inference rules for manipulating frame schemata for three-dimensional objects in space. He recognises (p. 229) that these are similar to the 'concrete operations' of Piaget and Inhelder [1956]. He also recognises that general inference rules for frames require a new 'formal stage' which enables children to be "reliably able to reason about, rather than with transformations". He suggests that 'formal operations' are "processes that can examine and criticize our earlier representations (be they frame like or whatever). With these we can begin to build up new structures to correspond to 'representations of representations'." According to the layering hypothesis, such 'second-order' processes are what allow concrete operations (at layer 3) to become abstracted into formal operations. Inter-layer procedural mechanisms then associate the layer of formal concepts with patterns of concrete operations. This layer allows new operations to be monitored and constructed by means of general rules of a formal nature.

Anderson's ACT* System

In this system, Anderson [1983] concentrates principally on the production rules for particular problem domains: temporal sequences, spatial images, and abstract propositions. All three representational types are allowed in his system, but he does not consider the possibility of a layered structure to bring these domains into a general framework. If the arguments of the present paper are correct, then constructing transformations between Anderson's representations will be a non-trivial problem.
It should be particularly difficult between the propositional type and the other two. On the present scheme, propositions form layers 2 and above, whereas temporal sequences and spatial images form layers 0 and 1 (depending on whether they are 'raw' images or arrangements of objects).

It is notable, however, that even for his restricted problems, Anderson has found it useful for psychological realism to postulate that the pattern-matching mechanisms for many different production rules all operate in parallel. In fact, he makes the rate of the pattern-matching processes proportional to an 'activation level' for the different rules. These parallel matching mechanisms are time-consuming to simulate on a serial computer, but such fast mechanisms appear to be necessary. According to the layering hypothesis, such parallel transformation mechanisms operate between each pair of adjacent layers.

Semantic Networks for Language Comprehension

Input to the semantic networks in AI systems is much easier if language sentences are used, as semantic nets are designed to relate easily to natural language (see Quillian [1968/1980], Simmons [1973], and Schank et al. [1977]). This relation is a simple example of an inter-layer transformation. Sentences, however, have been designed to carry remnants of their originating semantic structure. It is therefore not surprising that detecting this structure is easier than, for example, recognising the truth or falsity of a sentence by looking at the physical world. Furthermore, as Kobsa [1984] points out, the 'Pidgin English' labels on the nodes and edges of semantic nets are somewhat deceptive. They make the net seem natural to us. Yet if the net is to define all meanings purely from its relational structure, it should be able to function in many cases without verbal labels.
The 'conceptual dependency' graphs of Schank [1973, 1975] are an attempt to function in this more fundamental 'word-less' manner.

Conclusion

One plausible method of combining connectionist systems and rule-governed symbol processing has been shown to be the combination of connected networks in layers. I have argued that there are transformation rules, with complexity similar to that of Chomsky's grammars, between each adjacent pair of layers in a multi-layered network. This means that the possibility of what may be called 'generalised transformational grammars' is presupposed not only by language acquisition, but also by many stages of cognitive development. Evidence for these claims has been presented from two sources: developmental psychology, and an examination of the achievements and problems of computational modelling of knowledge representation.

References

Physics Department, University of Surrey, Guildford GU2 5XH, U.K. 1990.
